October 3, 2019

The AEC industry has a data problem

By Dane Stokes

ZGF Architects

Machine learning has been enjoying a healthy amount of press of late, with most industries touting the promise of limitless intelligence as the antidote to every company’s biggest challenges.

And many industries are indeed well-equipped to improve their processes with machine learning. Google, Tesla and others are fully utilizing artificial intelligence to improve their products, and they have been doing it for years.

So why is the AEC industry — and architecture in general — slow to follow suit?

Unfortunately, machine learning isn’t a magical portal through which our most vexing challenges are automatically solved by pulling a single lever. The shininess and popularity of machine learning, and AI in general, make them alluring talking points, but the key is data. Data itself is also often oversimplified into a talking point, and the AEC industry continues to lag behind in the quantity and quality of data it routinely gathers.

Successfully leveraging machine learning requires more than a general appreciation of data. We have to scientifically identify the problems we are trying to solve, then strategically gather the data to quantify and solve each particular problem with clear intent and rigor.

Laying the foundation

In the past year at ZGF Architects, we very quickly learned that attempting to use machine learning to solve a problem without first investing heavily in rigorous and intentional data collection was the very definition of putting the cart before the horse. Further still, even intentionally collecting data relevant to a problem wasn’t really the beginning of the process: first we had to define what a good outcome looks like.

Machine learning at its core isn’t really any different from human learning. We use everything we’ve experienced throughout our lives to inform the solutions and opinions we form every day.

When training a machine learning algorithm, these same concepts apply. It is up to us, the handlers of an algorithm, to give it the historical context it needs to solve a problem. We dictate the concept of right and wrong by showing the algorithm examples of good and bad solutions, with the hope that it will eventually identify good solutions on its own.
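To make "showing the algorithm examples of good and bad solutions" concrete, here is a minimal sketch of supervised learning. It uses a nearest-centroid classifier, chosen for brevity; the article does not say which algorithm ZGF uses. The two features (daylight and quietness scores) and their values are hypothetical. The model averages the labeled examples for each label, then labels a new case by whichever average it sits closest to.

```python
from math import dist
from statistics import fmean

# Hypothetical feature vectors: (daylight score, quietness score), each 0-1.
Features = tuple[float, ...]


def train_centroids(examples: list[tuple[Features, str]]) -> dict[str, Features]:
    """'Learn' from labeled examples by averaging each label's feature vectors."""
    grouped: dict[str, list[Features]] = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(centroids: dict[str, Features], features: Features) -> str:
    """Label a new example with whichever learned centroid it sits closest to."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Examples the handlers have already judged as good or bad (hypothetical data).
training = [
    ((0.9, 0.8), "good"),
    ((0.8, 0.9), "good"),
    ((0.2, 0.3), "bad"),
    ((0.1, 0.2), "bad"),
]
model = train_centroids(training)
print(predict(model, (0.7, 0.75)))  # → good
```

The model can only be as good as the labels behind it. If "good" and "bad" are poorly defined, the centroids will faithfully learn that confusion.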

But what is the difference between right and wrong in the context of architecture? The parameters for success are more muddied in our industry than, say, the realm of self-driving cars or email spam-blocking. So we went in search of meaningful data from within our existing process.

Training grounds for AI

To better understand a client’s current state and their future design needs, and to evaluate our own work once a building is complete, we routinely complete occupancy evaluations. The process has traditionally entailed collecting feedback through surveys distributed to the occupants.

Occupancy evaluations were a good start for our data-gathering efforts, but their purely qualitative responses are only part of the picture. To gain even a rudimentary understanding of how a designed space affects a survey participant, we quickly realized that our survey responses, especially for workplace typologies, had to be geotagged.

By analyzing responses within the context of a floor plan, and by pairing them with objective environmental data from workplace sensors (air quality, temperature, circulation, and more), we realized we could use machine learning to find possible correlations between specific qualities of a building and the satisfaction of its occupants. These are connections we might not have thought to explore otherwise.
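The pairing step might look roughly like the sketch below. Each geotagged response is matched to the nearest sensor on the floor plan, and a Pearson correlation is computed between one sensor channel and satisfaction. The class names, the use of CO2 as the air-quality measure, the coordinate units and all data values are hypothetical. A plain correlation stands in for the more capable machine learning models the article has in mind.

```python
from dataclasses import dataclass
from math import dist, sqrt


@dataclass
class Response:
    x: float           # respondent's desk location on the floor plan (hypothetical meters)
    y: float
    satisfaction: int  # survey rating, e.g. 1 (poor) to 5 (excellent)


@dataclass
class SensorReading:
    x: float           # sensor location on the same floor plan
    y: float
    co2_ppm: float     # one air-quality measure; any sensor channel would work


def nearest_reading(response: Response, readings: list[SensorReading]) -> SensorReading:
    """Pair a geotagged response with the closest sensor on the plan."""
    return min(readings, key=lambda s: dist((s.x, s.y), (response.x, response.y)))


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in xs))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in ys))
    return cov / (spread_x * spread_y)


def co2_vs_satisfaction(responses: list[Response], readings: list[SensorReading]) -> float:
    """Correlate each respondent's nearby CO2 level with their satisfaction rating."""
    co2 = [nearest_reading(r, readings).co2_ppm for r in responses]
    scores = [float(r.satisfaction) for r in responses]
    return pearson(co2, scores)


readings = [SensorReading(0, 0, 500), SensorReading(10, 0, 900)]
responses = [Response(1, 0, 5), Response(2, 1, 4), Response(9, 0, 2), Response(11, 1, 1)]
print(round(co2_vs_satisfaction(responses, readings), 2))  # → -0.95
```

A strong coefficient only flags a pairing worth investigating. It does not show that the building quality causes the satisfaction level.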

We are currently in the process of deploying our first geotagged survey on a client project and will be repeating the survey quarterly for the next year. Moving forward, the potential insight could help us make more informed design decisions than ever, rather than relying on rules of thumb or intuition alone.

Hypothetically, if a person complains about the quality of amenities in an existing office building, we could explore whether the person’s desk location is the culprit (maybe they sit too far from the amenities to use them), or whether low rates of amenity use overall (as measured by foot traffic) signal that the amenities are indeed subpar.
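The two explanations in this example can be checked against data with a simple rule-based sketch. Both thresholds and the function name are hypothetical placeholders that would need calibrating per project. Straight-line distance is a simplification; walking distance through the plan would be more realistic.

```python
from math import dist

# Hypothetical thresholds; real values would be calibrated per project.
FAR_FROM_AMENITY_M = 40.0   # desk-to-amenity distance considered "too far"
LOW_DAILY_VISITS = 50       # foot-traffic count considered "low overall use"


def triage_amenity_complaint(
    desk_xy: tuple[float, float],
    amenity_xy: tuple[float, float],
    daily_visits: int,
) -> list[str]:
    """Suggest which explanation(s) the data supports for an amenity complaint."""
    findings = []
    if dist(desk_xy, amenity_xy) > FAR_FROM_AMENITY_M:
        findings.append("desk location: respondent sits far from the amenity")
    if daily_visits < LOW_DAILY_VISITS:
        findings.append("low overall use: the amenity itself may be subpar")
    return findings or ["no clear signal from location or foot traffic"]


print(triage_amenity_complaint((0, 0), (50, 0), daily_visits=200))
# → ['desk location: respondent sits far from the amenity']
```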

If a particular group of employees who work in an open office environment reports having trouble concentrating at their desks, we could compare that team’s feedback with the experiences of a group reporting higher satisfaction in a similar setting. If the difference tracks the kind of work each group does, we would have identified a mismatch between the workplace environment and certain job profiles. In that case the open office concept itself isn’t implicated, and the problem can be addressed by more strategically locating certain teams in the new space.
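One way to check whether two teams' concentration ratings really differ, rather than varying by chance, is Welch's t-statistic. Its formula is (mean_a − mean_b) / sqrt(var_a/n_a + var_b/n_b). The test is our illustrative choice, not a method named in the article, and the ratings below are hypothetical. Each group needs at least two ratings for the sample variance to be defined.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a: list[float], group_b: list[float]) -> float:
    """Welch's t-statistic for the difference in mean scores of two groups.

    Uses sample variances and does not assume the groups have equal spread.
    """
    standard_error = sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    return (mean(group_a) - mean(group_b)) / standard_error


# Hypothetical "ability to concentrate" ratings (1-5) from two teams
# sitting in similar open-office settings.
team_struggling = [2, 3, 2, 1, 2]
team_satisfied = [4, 5, 4, 4, 3]
print(round(welch_t(team_struggling, team_satisfied), 2))  # → -4.47
```

A large magnitude suggests the gap is unlikely to be noise. Turning it into a p-value needs the t distribution, for example via `scipy.stats.ttest_ind(..., equal_var=False)`.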

Rethinking our approach

We have also had to retool the way we collect and store our data. Previously, occupant evaluation responses were housed in discrete project folders.

So we created a centralized, standardized SQL (structured query language) database where all of our occupancy evaluations to date now live. A SQL database allows us to cross-reference multiple projects and identify trends across our entire portfolio of work, and by project typology, rather than focusing on one discrete project at a time and then archiving it.
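A minimal sketch of the kind of cross-project query a centralized database enables is shown below. It uses SQLite so the example is self-contained. The two-table schema, the question key `daylight`, the project names and the scores are all hypothetical and do not describe ZGF's actual database. The key idea is a shared `typology` column plus standardized question keys, so one `GROUP BY` can compare every project at once.

```python
import sqlite3

# Hypothetical schema: one row per project, one row per survey answer.
SCHEMA = """
CREATE TABLE projects (
    project_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    typology   TEXT NOT NULL            -- e.g. 'workplace', 'higher-ed'
);
CREATE TABLE responses (
    response_id INTEGER PRIMARY KEY,
    project_id  INTEGER NOT NULL REFERENCES projects(project_id),
    question    TEXT NOT NULL,          -- standardized question key
    score       INTEGER NOT NULL        -- e.g. 1-5 rating
);
"""


def average_by_typology(conn: sqlite3.Connection, question: str) -> list[tuple]:
    """Average score for one standardized question across all projects, by typology."""
    return conn.execute(
        """
        SELECT p.typology, AVG(r.score), COUNT(*)
        FROM responses AS r
        JOIN projects AS p ON p.project_id = r.project_id
        WHERE r.question = ?
        GROUP BY p.typology
        ORDER BY p.typology
        """,
        (question,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO projects (project_id, name, typology) VALUES (?, ?, ?)",
    [(1, "Project A", "workplace"), (2, "Project B", "workplace"), (3, "Project C", "higher-ed")],
)
conn.executemany(
    "INSERT INTO responses (project_id, question, score) VALUES (?, ?, ?)",
    [(1, "daylight", 4), (2, "daylight", 2), (3, "daylight", 5)],
)
print(average_by_typology(conn, "daylight"))
# → [('higher-ed', 5.0, 1), ('workplace', 3.0, 2)]
```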

Our theory is that a number of years from now we will have ingested enough relevant and consciously gathered data that a machine learning algorithm might be able to identify correlations between the way we design buildings and the wellness and satisfaction of the occupants.

This means that we are focused on the long game: building a good foundation of data to train a machine learning algorithm, rather than building a machine learning solution that uses unhelpful or unorganized data to produce unhelpful results.

Our test bed

We are, however, already deploying machine learning on a different scale. One promising avenue of exploration is computer vision. We recently set up cameras in multiple locations within our Seattle office.

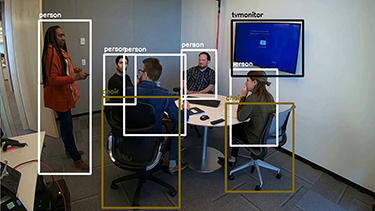

The camera feeds anonymized data into our machine learning-based tool, which is trained to recognize thousands of objects and can categorize them (chair, table, TV monitor, person) as well as record their position in space and the time each event occurred. The speed and resolution of this data are extremely high relative to capturing it by hand with traditional occupancy evaluations.
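The article does not describe the tool's output format. The sketch below assumes a hypothetical record per detected object holding a label, a floor position and a capture time, with no imagery, consistent with the data being anonymized. It groups those records into per-frame counts, which downstream metrics can build on.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Detection:
    label: str          # object class, e.g. "chair", "person", "tv"
    x: float            # position in the room (hypothetical floor coordinates)
    y: float
    seen_at: datetime   # capture time of the frame the object appeared in


def counts_by_frame(detections: list[Detection]) -> dict[datetime, Counter]:
    """Group detections by capture time and count each object label per frame."""
    frames: dict[datetime, Counter] = {}
    for d in detections:
        frames.setdefault(d.seen_at, Counter())[d.label] += 1
    return frames


t1 = datetime(2019, 10, 3, 9, 0)
t2 = datetime(2019, 10, 3, 9, 5)
events = [
    Detection("chair", 1.0, 2.0, t1), Detection("chair", 3.0, 2.0, t1),
    Detection("person", 1.1, 2.1, t1),
    Detection("chair", 1.0, 2.0, t2), Detection("chair", 3.0, 2.0, t2),
    Detection("person", 1.1, 2.1, t2), Detection("person", 3.1, 2.1, t2),
]
frames = counts_by_frame(events)
print(frames[t2]["person"], "people and", frames[t2]["chair"], "chairs at", t2)
```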

The tool’s potential uses include observing the number of chairs in a space and comparing that to the number of occupants to determine utilization rates, identifying televisions in a space and tracking the amount of time they are on as a reflection of amenity utilization, as well as measuring the flow rates of circulation spaces such as stairways and corridors.
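Once objects are counted, the three uses listed above reduce to simple arithmetic. In this sketch the function names, the cap on per-frame utilization (so standing occupants don't push it past 100%) and the sampling interval are illustrative assumptions, not details from the article.

```python
def seat_utilization(snapshots: list[tuple[int, int]]) -> float:
    """Average share of chairs occupied across frames.

    snapshots: (people, chairs) counts per frame. Each frame's rate is capped
    at 1.0 so people standing in the room don't push utilization past 100%.
    Frames with no chairs detected are skipped.
    """
    rates = [min(people / chairs, 1.0) for people, chairs in snapshots if chairs > 0]
    return sum(rates) / len(rates) if rates else 0.0


def tv_on_minutes(samples: list[bool], interval_min: float) -> float:
    """Estimated screen-on time, given one on/off observation per sampling interval."""
    return sum(samples) * interval_min


def flow_rate_per_min(crossings: int, window_min: float) -> float:
    """People passing through a stair or corridor per minute over an observation window."""
    return crossings / window_min


# Hypothetical counts from three frames of a conference room with four chairs.
print(round(seat_utilization([(2, 4), (4, 4), (6, 4)]), 3))  # → 0.833
print(tv_on_minutes([True, True, False, True], interval_min=15))  # → 45
print(flow_rate_per_min(120, window_min=60))  # → 2.0
```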

The ability to have a single piece of inexpensive hardware (a camera) track and understand a wide variety of objects, occupants and behaviors becomes an incredibly powerful way to quantify the usage and overall success of a space.

Previously, we might have relied on Microsoft Outlook calendar data to gauge how often a given conference room might be booked (an indicator of whether there were too many or too few collaboration zones in an office for the number of people working there). But as we all know, people often book spaces on a recurring basis when they don’t necessarily need to, and not everyone who accepts a meeting invite actually shows up.
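The gap between calendar bookings and actual use can be quantified by comparing the two as sets of time slots. This sketch assumes bookings have already been exported as slot labels and that the camera reports which slots had anyone present. Both inputs and the slot format are hypothetical; no Outlook API is used here.

```python
def no_show_rate(booked_slots: set[str], occupied_slots: set[str]) -> float:
    """Share of booked time slots in which the camera saw nobody in the room."""
    if not booked_slots:
        return 0.0
    empty_but_booked = booked_slots - occupied_slots
    return len(empty_but_booked) / len(booked_slots)


def unbooked_use(booked_slots: set[str], occupied_slots: set[str]) -> set[str]:
    """Slots where the room was used without a booking (e.g. ad hoc collaboration)."""
    return occupied_slots - booked_slots


# Hypothetical one-day example for a single conference room.
booked = {"09:00", "10:00", "11:00", "14:00"}
occupied = {"09:00", "14:00", "15:00"}
print(no_show_rate(booked, occupied))  # → 0.5
print(unbooked_use(booked, occupied))  # → {'15:00'}
```

Together, the two numbers show how far booking data alone would overstate demand for a room and how much real use it would miss.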

We are using our office as a test bed and will keep assessing the viability of tools like this one before deploying them on client projects.

Ultimately the resulting data, like occupant satisfaction feedback, is bolstering our understanding of the effects our spaces have on the people who live and work within them, not just through observation but through scientific rigor.

Dane Stokes is ZGF Architects’ computational design specialist. He is based in Seattle and supports firmwide project teams and R&D initiatives.
